I’ve never really gone very deep into graphics or rendering, but I’ve long been quite fascinated by the use of signed distance fields for defining geometry and implicit surfaces more generally. Like many people, I found out about this through Inigo Quilez’s work and Shadertoy in particular. A long time ago I did spend some time playing with it. I’d like to create more if I find the time / inspiration.

What I like most about SDFs for defining geometry is that simple geometry can be expressed simply. A sphere is defined with just a couple of mathematical operations:

float sphere(vec3 p, float r)

{

return length(p) - r;

}Compare this to regular triangle meshes where you can’t even define an exact sphere and trying to approximate it requires a huge number of vertices. This is not too difficult to define programmatically, but you are essentially forced to use authoring tools to deal with the complexity of defining geometry, no matter how simple. Many times when I’ve been prototyping game ideas, I’ve been frustrated that I’m forced to open up Blender just to create the most trivial piece of geometry.

What I’d really like is just some textual format for defining geometry similar to how you do it with SDF. OpenSCAD is there for CSG, but as far as I can tell you can’t do anything like smooth unions or domain transformations, which are needed for more organic-looking geometry. Using pure shader code is ok for this, but with shapes being represented as functions, transformations really need a language that supports first class functions (an SDF is a function of ℝ³→ℝ, then a union transformation is (ℝ³→ℝ)→(ℝ³→ℝ)→(ℝ³→ℝ)).

Finally, with LLMs in the picture, as cool as Blender MCP is, it would be nice if the LLM could just spit out a textual scene format. I tend to agree with Carmack here:

LLM assistants are going to be a good forcing function to make sure all app features are accessible from a textual interface as well as a gui. Yes, a strong enough AI can drive a gui, but it makes so much more sense to just make the gui a wrapper around a command line interface…

— John Carmack (@ID_AA_Carmack) December 31, 2024

To that end, I started playing around with an idea of an SDF-based file format. As far as I can find, there isn’t really anything like this (which was quite surprising!). Would be interested to know if something like this exists.

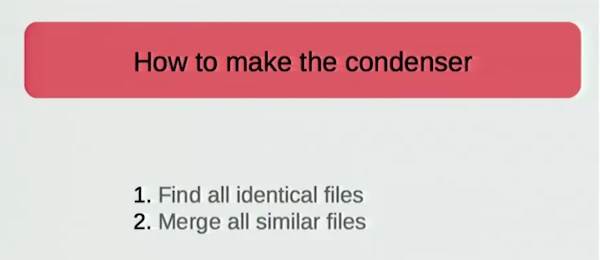

The basic idea is that you can define shapes textually via simple primitives, transformations, and operations:

# Define sphere at origin, and rotated cube.

shape s = sphere(radius: 2);

shape b = box(width: 3, height: 3, depth: 3) |> rotate(x: 100, y: 200, z: 100);

# Translate and then combine via smooth union.

# As the last expression in the file, this is the output shape.

smooth_union(

s |> translate(x: -1, y: 0, z: 0),

b |> translate(x: 1, y: 0, z: 0),

k: 1.0

);This file defines the shape. I haven’t put a lot of thought into syntax yet, but the shape type here actually is an SDF, and we have primitives such as sphere and box as leaf nodes and higher-order SDFs such as translate and smooth_union for transformations.

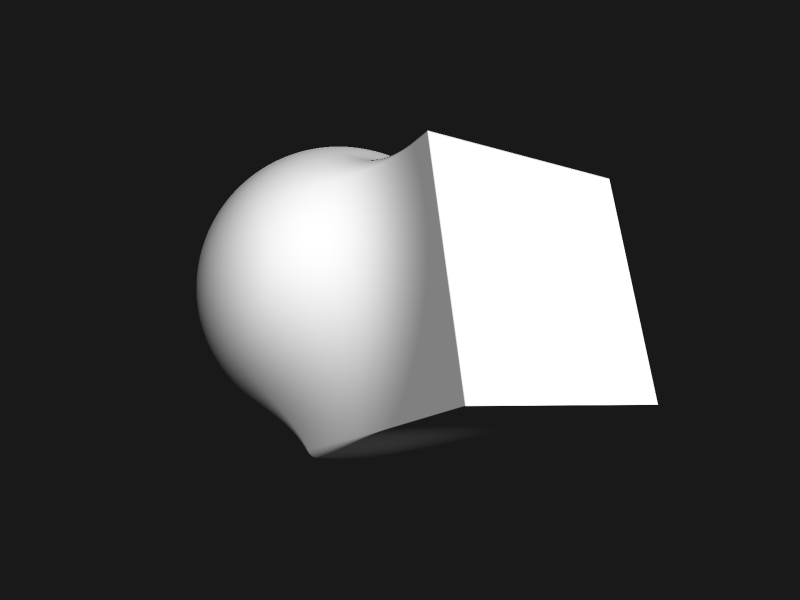

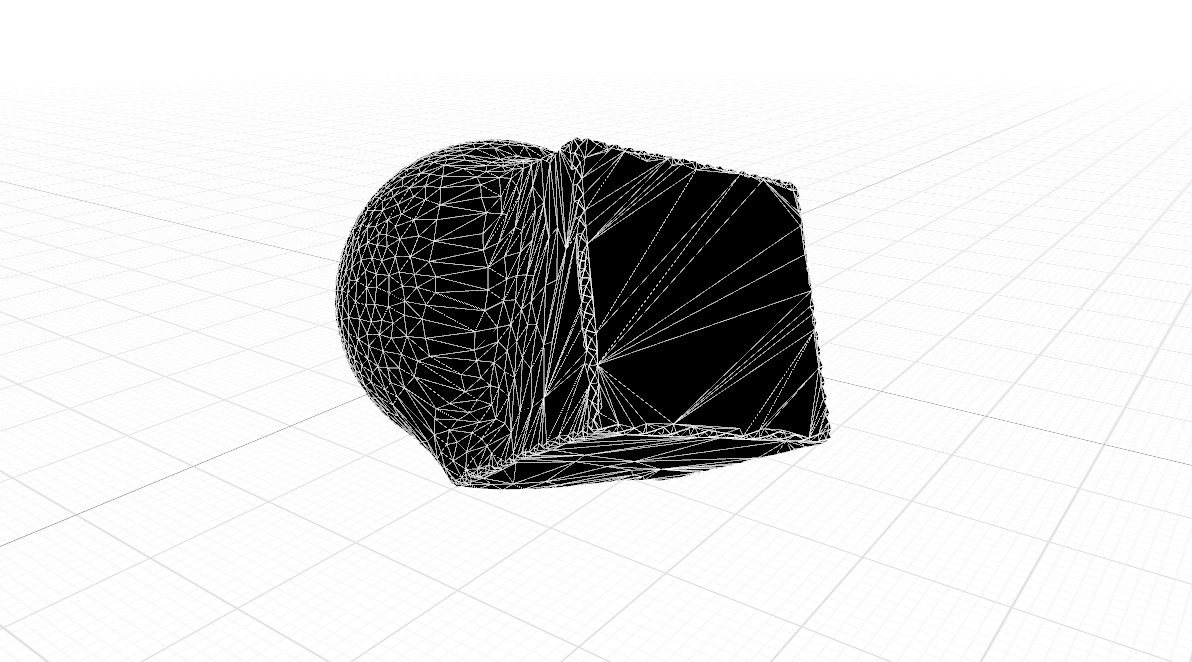

With geometry defined this way, you can then render via a simple tool that understands how to parse the format. The tool does basic parsing and semantic analysis producing a runtime data model that supports querying the field at any coordinate. The tool also supports rendering using basic raymarching (its only function right now).

sdftool demo.sdf -o demo.pngThis produces:

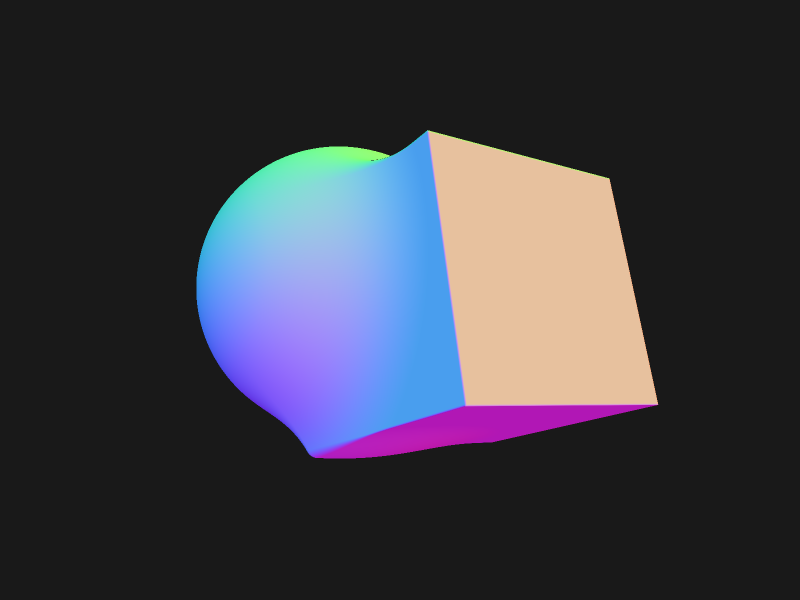

Since we have an analytical shape, we can also extract normals via tetrahedron technique.

sdftool demo.sdf -o normals.png --render-mode normal

If we need a mesh, we can generate one (currently using marching cubes with basic QEM simplification):

sdftool demo.sdf -o mesh.obj --mesh

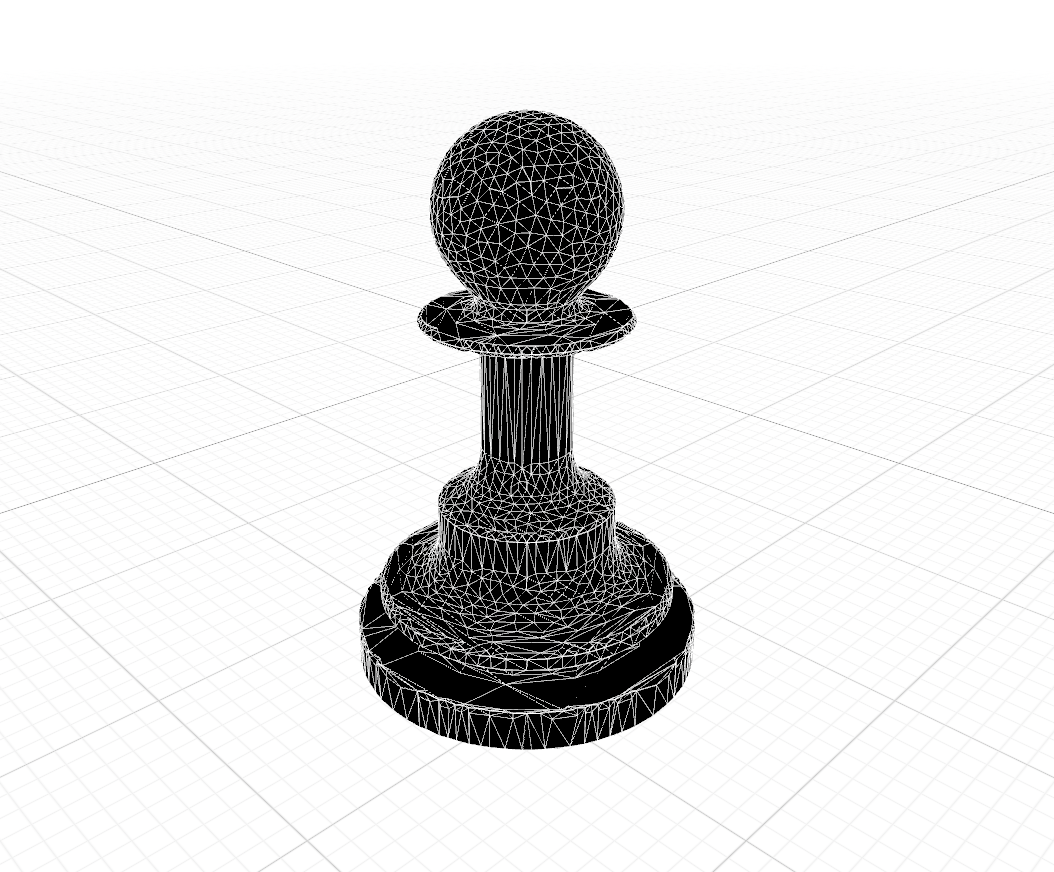

With this language in place, I can quite easily generate non-trivial meshes purely with text such as a chess pawn with smooth curves:

shape base = cylinder(radius: 1.4, height: 0.35) |> color(0.4, 0.35, 0.33);

shape base_rim = cylinder(radius: 1.2, height: 0.15) |> translate(y: 0.45);

shape stem = cylinder(radius: 0.36, height: 2.4) |> translate(y: 1.5);

shape mid_stem = cylinder(radius: 0.7, height: 1.4) |> translate(y: 0.5);

shape collar = cylinder(radius: 0.78, height: 0.01) |> translate(y: 2.7) |> expand(0.04);

shape head = sphere(radius: 0.7) |> translate(y: 3.5);

shape base_assembly = smooth_union(base, base_rim, k: 0.1);

shape mid = smooth_union(base_assembly, mid_stem, k: 0.4);

shape lower_pawn = smooth_union(mid, stem, k: 0.5);

shape pawn_collar = smooth_union(lower_pawn, collar, k: 0.1);

shape pawn = smooth_union(pawn_collar, head, k: 0.30);

translate(pawn, y: -2.5);

Another benefit of this format is size: the raw text for this pawn mesh is just 757 bytes, and this is without any attempt at compression. Could easily be under 100 bytes with minimal effort. The mesh by comparison is a few hundred kilobytes.

Of course, there are downsides to SDFs: rendering SDFs directly is still very expensive and complex geometry may end up being extremely difficult to model this way.

There are some future directions I’d like to take this:

- A runtime library could load the file and support an API for evaluating the field at points, or raymarching.

- The library could also allow creating the data format in memory at runtime.

- Build more scaffolding to allow LLMs to create geometry from a prompt.

- Support approaches to texturing.

- And of course, improved algorithms for mesh generation and rendering.

Will continue tinkering on this and if it proves useful will maybe open source.